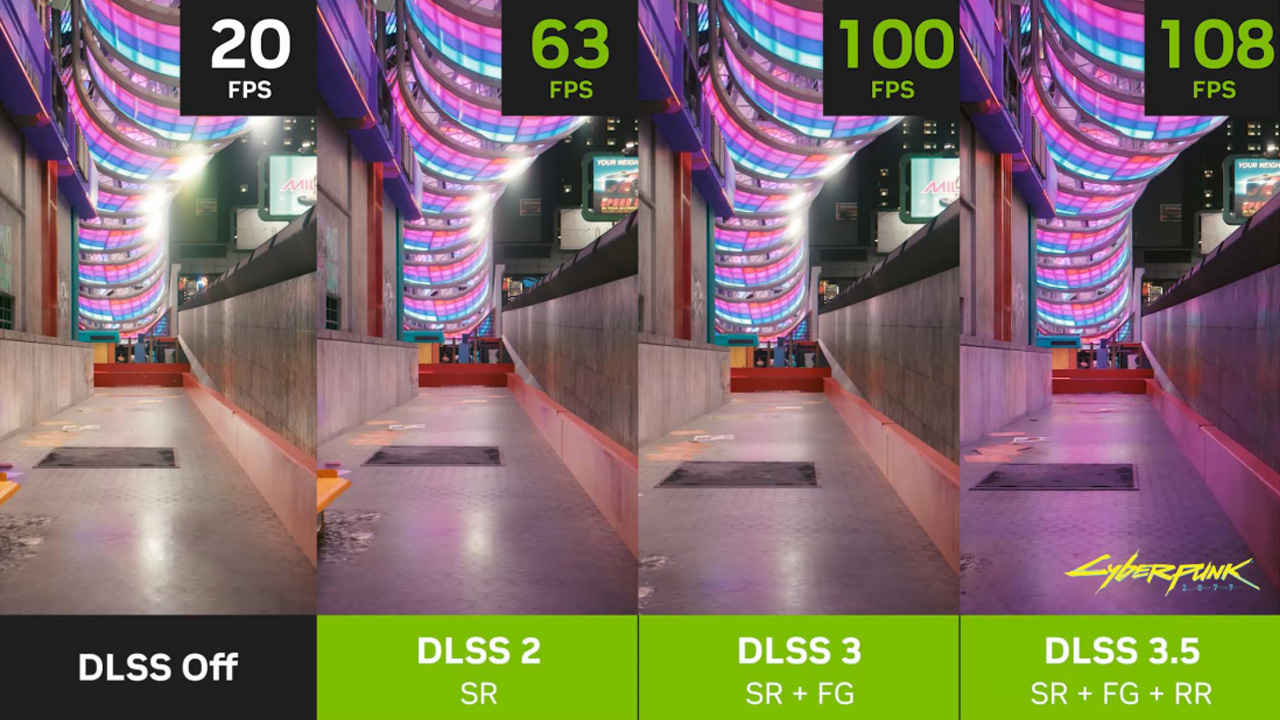

NVIDIA unveils DLSS 3.5 with new Ray Reconstruction feature, here’s how it works

NVIDIA, at Gamescom 2023, unveiled a new AI-powered addition to its DLSS feature which promises to improve the visual fidelity of ray-traced images. The new feature is called Ray Reconstruction and will replace hand-tuned denoisers which have been traditionally used for improving the quality of images. The new Ray Reconstruction feature will work on all GeForce RTX GPUs released by the company to date. Several games such as Alan Wake 2, Cyberpunk 2077 and Portal with RTX are expected to incorporate DLSS 3.5 in the coming months. If you’re wondering what a denoiser is or how this new Ray Reconstruction feature helps, then read on.

“Fully ray-traced lighting in real-time was a significant milestone on our journey to photorealistic graphics in games, and we met those goals with Cyberpunk 2077’s RT Overdrive Mode, Minecraft with RTX, and Portal with RTX. With the addition of Ray Reconstruction in NVIDIA DLSS 3.5, developers are aided by AI to enhance the worlds they create by increasing image quality further than previously thought possible,” said Matt Wuebbling, VP of Marketing, NVIDIA.

NVIDIA Ray Reconstruction

Hand-tuned denoisers can do a pretty great job at filling in the right information and helping remove noise. However, when you try to upscale an image that has been denoised, you will easily notice that a denoiser has been used. Edges of objects will be off, and reflections and shadows will also appear a little wonky. Unfortunately, DLSS pretty much requires upscaling. So when images are generated using DLSS and upscaled, the weird artefacts of using a denoiser, which otherwise would not have caught your attention, do pop out. This is why NVIDIA needs a denoiser that can actually factor in the upscaling. This is where Ray Reconstruction comes into the picture.

Ray Reconstruction takes into account the ray-tracing information and also factors in motion vectors. So, if an object within a game is moving from left to right in front of your eyes, that information helps the engine generate the right colours for each pixel. Once that’s done, the Ray Reconstruction feature creates an image. When the next image in the sequence is generated, a small feedback loop lets Ray Reconstruction perform temporal filtering as well. After this point, NVIDIA’s Optical Flow Accelerator and Frame Generation features play their part. Everything from this point remains the same as DLSS 3.1.
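To get a feel for why motion vectors matter, here is a minimal Python sketch of reprojection, the general idea of using per-pixel motion to look up where each pixel’s content sat in the previous frame. This is not NVIDIA’s implementation. The `reproject` function, the whole-pixel `(dx, dy)` motion format and the `fallback` value are all assumptions made for illustration.

```python
def reproject(previous_frame, motion_vectors, fallback=0.0):
    """Fetch, for each pixel, the colour its content had in the previous frame.

    Hypothetical format: motion_vectors[y][x] is (dx, dy), how far the content
    now at (x, y) has moved since the previous frame, in whole pixels.
    """
    height, width = len(previous_frame), len(previous_frame[0])
    result = [[fallback] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = motion_vectors[y][x]
            # Step backwards along the motion to find the old position.
            src_x, src_y = x - dx, y - dy
            if 0 <= src_x < width and 0 <= src_y < height:
                result[y][x] = previous_frame[src_y][src_x]
            # Otherwise the content was off-screen last frame: keep the fallback.
    return result


if __name__ == "__main__":
    # A bright object at the left edge that moves one pixel to the right.
    previous = [[1.0, 0.0, 0.0]]
    motion = [[(1, 0), (1, 0), (1, 0)]]
    print(reproject(previous, motion))
```

With the old frame lined up like this, the previous and current results for the same object can be compared pixel by pixel, which is what any temporal filtering step needs.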

Here’s a sample showing an upscaled image with DLSS switched off and switched on. In the former, the shadows disappear simply because there wasn’t enough information for the upscaler to even know if there was a shadow in the first place.

What is a denoiser?

If you’ve ever taken a photo under low-light conditions, then you might have noticed grainy specks on the photo, especially in the dark regions of the photograph. Camera sensors capture the most detail when there’s plenty of light incident on them, so a brightly lit image looks clear since the sensor can pick up on all the details. When there’s less light, the sensor cannot see a lot of the details and ends up making do with whatever data it has. Have a look at the image below and the section that we have zoomed in on.

Those little white grains in the dark regions are noise. Basically, the image is a mix of useful and unwanted information. We aren’t getting into why this happens in detail; that’s for another article. To remedy this problem, we’ve built technologies that approximately gauge what the useful data is and use it to create clear images.

In computer graphics, denoisers work towards improving the quality of a generated image by focusing on three different kinds of data or signals. These are:

Diffusion

Reflection

Shadows

These are three different ways in which light interacts with objects in an image. Diffusion is all about light getting reflected in all directions after hitting an object. Reflections deal with light bouncing off in a single direction, and shadows form where little or no light reaches a surface because something is blocking it.

When ray tracing happens in video games, the graphics card has to calculate how each ray of light interacts with its environment, and each image has hundreds of thousands of rays being tracked. Getting a photorealistic image would require millions of rays being computed, but for the sake of time, only a relatively small set of rays is used for generating an image. The image below shows how a ray-traced image appears if only one ray is used per pixel.

This is similar to taking a low-light photograph and the resultant image will have a lot of noise due to insufficient data. So we get an image with weird grains of differently coloured pixels because there’s not enough information to figure out the colour of each pixel. A denoiser looks at this image and tries to remove noise by approximating the actual pixel colour.
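A small Python sketch makes the link between ray count and noise concrete. It uses a toy model that is purely an assumption for illustration: every ray either reaches a light (counted as 1.0) or misses it (0.0), and a pixel’s colour is the average over its rays. The function names `estimate_pixel` and `noise_level` are hypothetical, and nothing here reflects how a real renderer or DLSS is written.

```python
import random
import statistics


def estimate_pixel(true_brightness, rays_per_pixel, rng):
    """Average the results of `rays_per_pixel` random rays for one pixel.

    Toy model: each ray hits the light with probability `true_brightness`.
    """
    hits = sum(
        1.0 if rng.random() < true_brightness else 0.0
        for _ in range(rays_per_pixel)
    )
    return hits / rays_per_pixel


def noise_level(true_brightness, rays_per_pixel, pixels=2000, seed=0):
    """Spread (standard deviation) of the estimates over many identical pixels."""
    rng = random.Random(seed)
    estimates = [
        estimate_pixel(true_brightness, rays_per_pixel, rng)
        for _ in range(pixels)
    ]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    # Every pixel should be the same mid-grey, yet few rays give a wide spread.
    for rays in (1, 16, 256):
        print(rays, "rays per pixel, noise:", round(noise_level(0.5, rays), 3))
```

In this toy model, the spread between pixels that should look identical shrinks as more rays are averaged, which is why a one-ray-per-pixel image looks so grainy and why a denoiser has to estimate the missing information.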

Denoisers can look at neighbouring pixels and fill in the gaps. This is called spatial filtering. They can also take two consecutively generated frames and determine if there are corrections that can be made depending on which frame managed to properly generate the right pixel data. That’s called temporal filtering. Here’s the same image as before with a denoiser added.
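Both kinds of filtering can be sketched in a few lines of Python. This is a deliberately simple illustration, not what a hand-tuned production denoiser does: the spatial filter is a plain 3x3 average, the temporal filter is a fixed blend between the accumulated history and the new frame, and the scene is assumed to be static. The names `spatial_filter`, `temporal_filter` and `blend` are assumptions made for this example.

```python
def spatial_filter(image):
    """Replace each pixel with the average of itself and its neighbours (3x3)."""
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            result[y][x] = sum(neighbours) / len(neighbours)
    return result


def temporal_filter(history, current, blend=0.2):
    """Mix the new frame into the accumulated history, pixel by pixel.

    A small `blend` trusts the history more, which smooths noise over time.
    """
    return [
        [(1.0 - blend) * h + blend * c for h, c in zip(history_row, current_row)]
        for history_row, current_row in zip(history, current)
    ]


if __name__ == "__main__":
    # One stray bright pixel in an otherwise dark 3x3 image.
    noisy = [[0.0, 0.0, 0.0],
             [0.0, 9.0, 0.0],
             [0.0, 0.0, 0.0]]
    smoothed = spatial_filter(noisy)
    print(smoothed[1][1])
    print(temporal_filter(smoothed, noisy)[1][1])
```

The trade-off is visible even here: the averaging that hides the stray bright pixel would also soften a genuine edge or highlight.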

Beyond spatial and temporal filtering, you can also use AI models that have been trained extensively to figure out what the right colour is for a particular pixel. That’s the route that the new Ray Reconstruction feature in DLSS 3.5 takes.