Beware of the AI impostor scam that cost Indians lakhs of rupees

Imagine your sibling, child, or grandchild calling you and asking for help. They’re stuck in a difficult situation and need money to get out of it. What would you do? Your first instinct may be to pay up, but it pays to pause and think rationally, and here’s why.

McAfee recently published a cybersecurity report on AI that covers the artificial intelligence impostor scam. Scammers are cloning people’s voices and calling their relatives to ask for money to get out of a tight spot. According to the report, Indians have two reasons to worry about this scam.

First, of the eight countries covered in the report, India ranks as the most scammed. Second, Indians are among the most active social media users, which leaves them more exposed to the AI impostor scam. We read the full report, and here is what you need to know about the scam and how to protect yourself from it.

What is an AI impostor scam and how does it work?

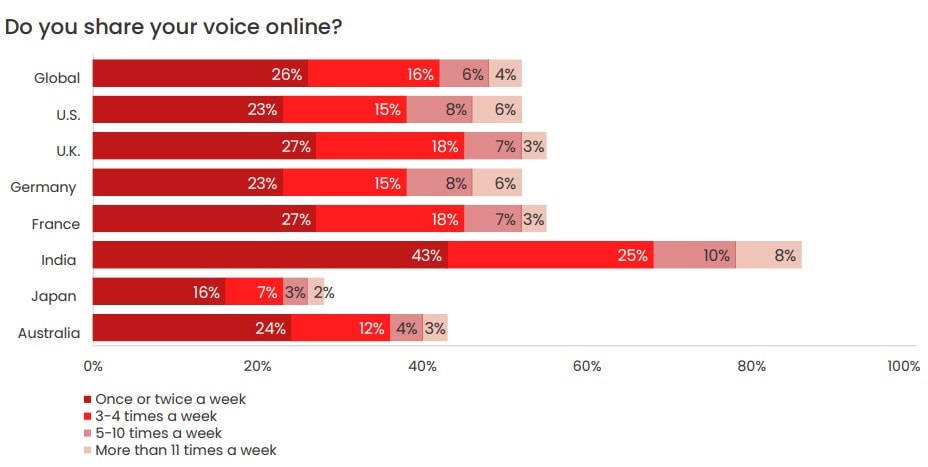

The McAfee report says that forty-three percent of surveyed Indians share their voice online at least once a week. This includes reels, shorts, and other videos that feature the uploader’s voice. Scammers use audio samples from these videos to create a convincing clone of your voice.

Once they have your voice, they send a voice note to your relatives or friends asking for financial help. They could also clone a relative’s or friend’s voice and ask you for help. According to the report, twenty percent of surveyed Indians have received such notes, and twenty-seven percent know others who have received similar calls or notes. This is by far the highest share among the countries surveyed.

The most common scenarios include a car accident, a stolen wallet and phone, or the relative being stranded on vacation abroad and in need of help. More than sixty percent of surveyed Indians said they would send money to a relative if they received a voice note describing one of these situations.

Moreover, the report says that seventy-seven percent of people who received such notes were scammed out of money. More than a third of them lost over $1,000 (about Rs. 82,000), and seven percent lost between four lakh rupees ($5,000) and twelve lakh rupees ($15,000).

This is where you have to be smart and not act purely on emotion. Here are some ways to check whether it is really a relative or friend in distress, or someone trying to scam you.

How to save yourself from artificial intelligence impostors and AI voice clones

According to the McAfee report, one of the best practices is to keep your social media accounts private. However, that may not be practical for many of us today. So here are some more practices you can follow to keep yourself safe from AI voice clones.

Set a code word with your immediate family. If you receive a distress call from an unknown number claiming to be a family member, asking for the code word can help you verify the caller’s identity.

Ask them something only the two of you know. This could be the name of another close relative, someone’s birthday, or anything else that only you and the caller would know. A scammer is likely to be caught off guard by this.

Don’t rush to send money. Scammers count on your emotions to pressure you into paying quickly. Check the bank account details, the number they are calling from, or the account that reached out to you.

Avoid unknown callers. This is something of a golden rule in the age of scams. Don’t entertain unfamiliar calls asking for money, OTPs, KYC details, and so on.

Consider an identity protection service if you post online frequently, have a public account, and can afford to spend ten to twelve thousand rupees (about $150). Such a service can help you detect whether your information is leaking on the dark web.

If this is the golden age of AI, remember that it is also a golden age for scammers who use AI. Be careful online, and keep reading Techlusive to stay up to date on everything tech.

The post Beware of the AI impostor scam that cost Indians lakhs of rupees appeared first on Techlusive.